Shuai Zhang

Assistant Professor

About me

I joined NJIT from Rensselaer Polytechnic Institute (RPI), where I was a postdoctoral researcher focusing on the theoretical and algorithmic foundations of deep learning. I received my Ph.D. from the Department of Electrical, Computer, and Systems Engineering (ECSE) at RPI in 2021, supervised by Prof. Meng Wang, and my Bachelor's degree in Electrical Engineering (EE) from the University of Science and Technology of China (USTC) in 2016. My interests span deep learning, optimization, data science, and signal processing, with a particular emphasis on learning theory, the design of machine learning algorithms, and the development of efficient and trustworthy AI.

I am always looking for self-motivated students (PhD students with RA/TA support, Master's students, and undergraduates) with interests in data science, machine/deep learning, and signal processing.

Interested candidates are strongly encouraged to contact me at sz457 at njit.edu, with a resume and transcripts attached.

Research

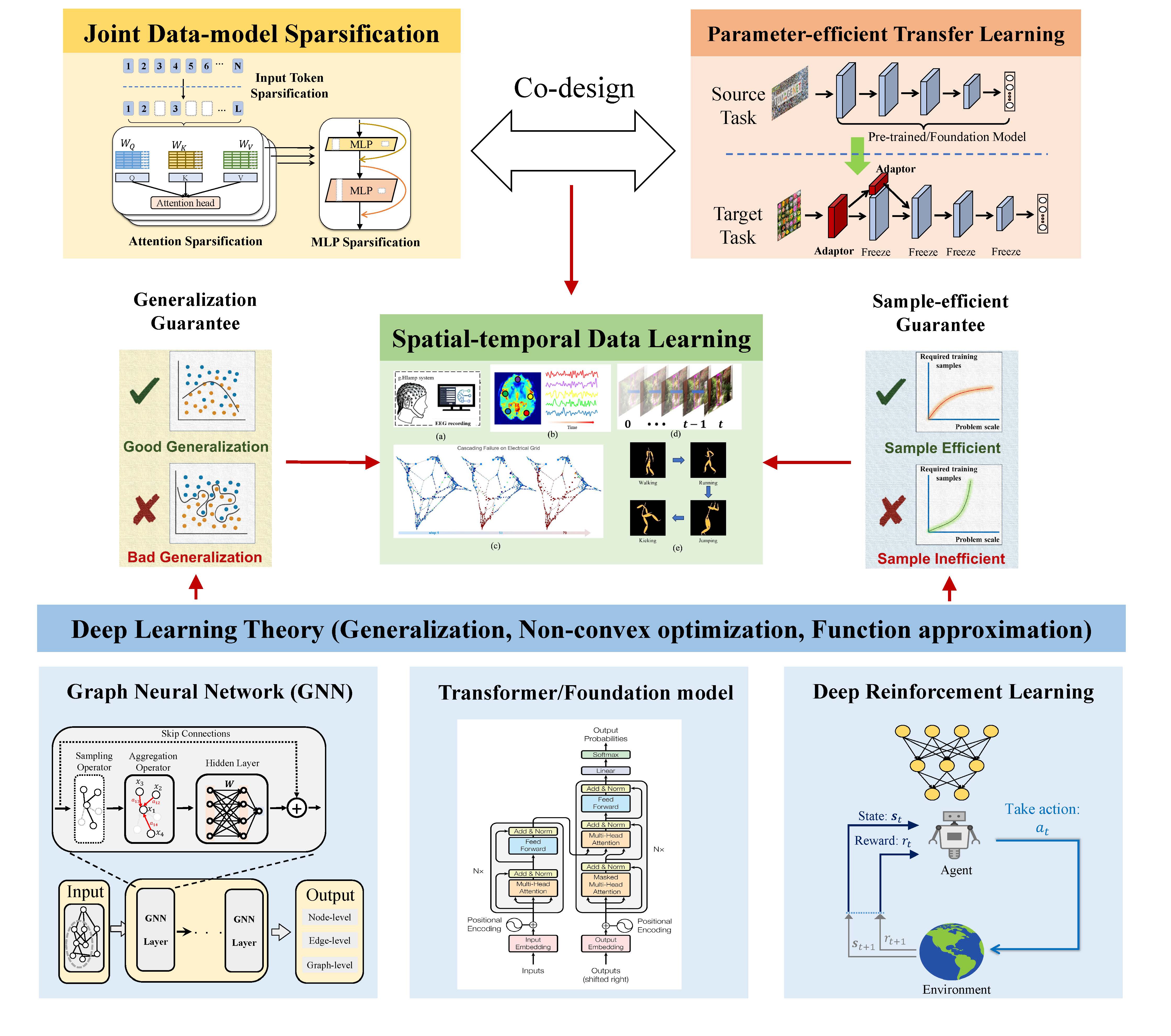

As AI continues to advance, several challenges must be addressed to ensure not only its widespread adoption but also its safe and efficient deployment. These challenges include the high cost of computation, the limited availability of high-quality labeled data, limited generalization across domains, and the lack of transparency, specifically the absence of explanations for how AI works. My long-term research objective is to study the theoretical foundations of artificial intelligence and to design principled and efficient algorithms for better, safer, and more efficient AI applications. My research interests cover the following areas:

Machine/Deep Learning

Learning Theory

High-dimensional Data Analysis

Spatio-temporal Data Analysis

Deep Reinforcement Learning

Probability and Statistical Inference

My current research interests lie in advancing the theoretical foundations of large language models (LLMs), including the analysis of learning paradigms (e.g., contrastive learning and in-context learning) and the study of efficient neural network architectures such as Transformers and the emerging Mamba. I am also interested in the algorithmic foundations of parameter-efficient transfer learning and sparse learning, particularly for applications involving spatiotemporal data. Thank you, NSF!

Recent news (Last updated on 1/26/2026)

Jan. 2026: Two papers were accepted at ICLR. Congratulations to Haixu and Mugunthan on their first papers as lead authors. Links will be provided soon.

Jan. 2026: One paper on a new parameter-efficient fine-tuning method, termed visual prompting, was accepted at AISTATS.

Jan. 2026: One paper on using in-context learning for mid-term load forecasting was accepted at IEEE Transactions on Power Systems. [IEEE Xplore]

Oct. 2025: One paper on gradual domain adaptation was accepted at ACM MM 2025.

Sep. 2025: Two papers, on "Theoretical Analysis of Contrastive Learning with Modality Misalignment" and "Self-training in Improving Domain Adaptation", were accepted at NeurIPS.

Sep. 2025: One paper, "Is FISHER All You Need in The Multi-AUV Underwater Target Tracking Task?", was accepted to IEEE Transactions on Mobile Computing (TMC). It will appear soon.

Jun. 2025: Two papers were accepted at IROS.

Feb. 2025: Glad to receive funding from NSF CISE-CNS in collaboration with Prof. Songyang Zhang at ULL. The project will focus on spatiotemporal graph-structured data processing!

Jan. 2025: One paper on task vectors in machine unlearning was accepted to ICLR 2025!

Jan. 2025: One paper on unmanned underwater vehicles was accepted to the IEEE Internet of Things Journal!

Dec. 2024: Two papers were accepted to ICASSP!

Nov. 2024: One paper was accepted to the IEEE Sensor Array and Multichannel Signal Processing Workshop (SAM)!

Oct. 2024: Excited to serve as the editor of the book Generative AI in Wireless Communication, published by Elsevier! Expected release: October 2025.

Sep. 2024: Welcome, Haixu and Mugunthan, to the AOE (Algorithmic and Optimization Foundations of Efficient Learning) Lab!

May 2024: Glad to receive an NJIT Seed Grant!

May 2024: Two papers, on Federated Contrastive Learning and Transfer Reinforcement Learning via Successor Features, were accepted to ICML 2024!

Sep. 2023: Our paper "On the Convergence and Sample Complexity Analysis of Deep Q-Networks with ε-Greedy Exploration" was accepted to NeurIPS 2023!

Aug. 2023: I joined NJIT as an Assistant Professor.

Apr. 2023: One paper has been accepted at ICML 2023.

Jan. 2023: Our paper on Joint Sparse Learning for Graph Neural Networks is accepted to the International Conference on Learning Representations (ICLR) 2023!

Nov. 2022: Invited talk at the Electrical and Computer Engineering Seminar at Iowa State University.

Mar. 2022: Paper with Hongkang, "Learning and generalization of one-hidden-layer neural networks, going beyond standard Gaussian data", is accepted to the 56th Annual Conference on Information Sciences and Systems (CISS), 2022.

Jan. 2022: Our paper "How unlabeled data improve generalization in self-training? A one-hidden-layer theoretical analysis" is accepted to the 10th International Conference on Learning Representations (ICLR) 2022!

Dec. 2021: Shuai successfully defended his thesis. Thanks to Drs. Meng Wang, Ali Tajer, John E. Mitchell, and Birsen Yazıcı for serving as committee members.

Sep. 2021: Our paper "Why Lottery Ticket Wins? A Theoretical Perspective of Sample Complexity on Sparse Neural Networks" is accepted to the Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS) 2021!

May 2021: Shuai won the Allen B. DuMont Prize from the Department of ECSE at RPI (the award is presented to outstanding doctoral graduates; two ECSE students received it in 2021).

Jun. 2020: Our paper "Improved Linear Convergence of Training CNNs with Generalizability Guarantees: A One-hidden-layer Case" is accepted to IEEE Transactions on Neural Networks and Learning Systems (TNNLS).

Jun. 2020: Our paper "Fast Learning of Graph Neural Networks with Guaranteed Generalizability: One-hidden-layer Case" is accepted to the International Conference on Machine Learning (ICML) 2020.

Sep. 2019: Shuai received Rensselaer's Founders Award of Excellence.

Feb. 2019: Our paper "Correction of Corrupted Columns in Robust Matrix Completion by Exploiting the Hankel Structure" is accepted to IEEE Transactions on Signal Processing (TSP).

Mar. 2018: Our paper "Correction of Simultaneous Bad Measurements by Exploiting the Low-rank Hankel Structure" is accepted to the IEEE International Symposium on Information Theory (ISIT) 2018.

Mar. 2018: Paper with Yingshuai, "Multi-Channel Hankel Matrix Completion through Nonconvex Optimization", is accepted to the IEEE Journal of Selected Topics in Signal Processing (JSTSP)!

Sep. 2017: Paper with Yingshuai, "Multi-Channel Missing Data Recovery by Exploiting the Low-rank Hankel Structures", is accepted to the IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP) 2017.